Latin hypercube sampling with weight Pc

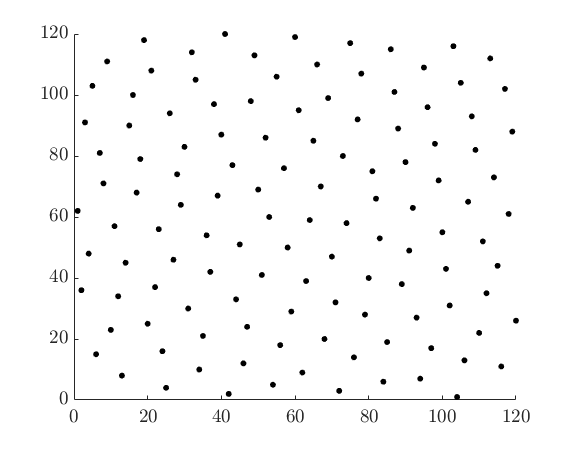

From each challenge function, input-output pairs were generated using the three sampling methods for nine sample sizes, and each surrogate model was trained on these data sets. The performance of every surrogate model was then evaluated on a data set of 100,000 input-output pairs generated with the Sobol sampling technique. The error, i.e. the difference between the surrogate model prediction and the actual function output, was calculated for each of these 100,000 input-output pairs. The errors and, when necessary, the number of model parameters were then used to calculate the performance metrics: MaAE, RMSE, the Akaike Information Criterion, the Bayesian Information Criterion, R-squared, and adjusted R-squared (MathWorks, 2017). Additionally, the training time was recorded during the phase of the program in which the model was trained, and the evaluation time during the phase in which the final outputs were generated. All computations were carried out on an HP Spectre x360 x64-based PC with 16 GB RAM using MATLAB 2017b.

Latin Hypercube Sampling (LHS) is a variant of the quasi-Monte Carlo method that has been widely used to spread samples efficiently over the entire sampling space without any overlap [17], as shown in Fig. 1. It generates what are known as controlled random samples and is often applied in Monte Carlo analysis because it can dramatically reduce the number of simulations needed to achieve accurate results. The LHS UNIX Library/Standalone uses the Latin Hypercube Sampling (LHS) method to …

…, $X_N$ that they call Latin hypercube sampling. In Section 2, I describe this procedure. In Section 3, I derive the asymptotic variance of $\bar{h}$, the estimator of $E[h(X)]$ based on a Latin hypercube sample. I find that as long as $N$, the number of simulations, is large compared with $K$, the number of variables, Latin hypercube sampling gives an estimator of $E[h(X)]$ with lower variance than simple random sampling.

With $L$ PEs and $N$ memories, $t_{ij}$ denotes the weight between $i$ and $j$.
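The metric step described above can be sketched as follows. This is a minimal illustration, not the authors' MATLAB code, and the function name is hypothetical. Several choices are assumptions: MaAE is read as the maximum absolute error, AIC and BIC use the Gaussian least-squares forms $n\ln(\mathrm{SSE}/n) + 2k$ and $n\ln(\mathrm{SSE}/n) + k\ln n$ (MATLAB's definitions may differ by additive constants), and $k$ is treated as the number of fitted parameters excluding the intercept in adjusted R-squared.

```python
import math
from typing import Sequence


def performance_metrics(y_true: Sequence[float], y_pred: Sequence[float], k: int) -> dict:
    """Compute error-based metrics for a surrogate model (hypothetical helper).

    Assumptions: MaAE = maximum absolute error; AIC/BIC in least-squares form;
    k = number of model parameters excluding the intercept. Requires SSE > 0.
    """
    n = len(y_true)
    if n != len(y_pred) or n <= k + 1:
        raise ValueError("need matching lengths and n > k + 1")
    # Error = surrogate prediction minus actual function output.
    errors = [p - t for t, p in zip(y_true, y_pred)]
    sse = sum(e * e for e in errors)
    mean_y = sum(y_true) / n
    sst = sum((t - mean_y) ** 2 for t in y_true)
    r2 = 1.0 - sse / sst
    return {
        "MaAE": max(abs(e) for e in errors),
        "RMSE": math.sqrt(sse / n),
        "AIC": n * math.log(sse / n) + 2 * k,
        "BIC": n * math.log(sse / n) + k * math.log(n),
        "R2": r2,
        "R2_adj": 1.0 - (1.0 - r2) * (n - 1) / (n - k - 1),
    }
```

In the study, `y_true` and `y_pred` would correspond to the 100,000 Sobol-generated test points; timing could be added around the training and evaluation calls with `time.perf_counter()`.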

The sampling methods used to generate training data from the challenge functions were LHS, the Sobol sequence, and the Halton sequence. These methods were selected because they have been shown to sample the input space uniformly with limited sample sizes for functions of up to ten dimensions (Dife and Diwekar, 2016). Latin hypercube sampling distributes samples evenly over the sample space. It is a stratified sampling technique: the range of each input variable is split into N intervals of equal probability, where N is the number of sample points. Each of the N intervals is then sampled once, and the values for the different variables are combined at random. Sobol and Halton sequences are both quasi-random low-discrepancy sequences, which seek to distribute samples uniformly across the input space.

Sarah E. … Eden, in Computer Aided Chemical Engineering, 2018 (Section 4, Computational Experiments)

Proposition 10.1 in the book chapter shows that the extent to which LHS is an improvement depends on the degree to which your function $f$ is additive.
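The stratify-then-shuffle procedure described above can be sketched in a few lines, with a Halton generator alongside for comparison. This is a minimal sketch under stated assumptions: samples lie on the unit hypercube $[0,1)^k$ (other distributions would be obtained through inverse CDFs), the function names are hypothetical, and the Halton generator uses the first ten primes as bases, skips index 0, and applies no scrambling. Sobol sequences need tabulated direction numbers and are omitted.

```python
import random
from typing import List


def latin_hypercube(n: int, k: int, seed: int = 0) -> List[List[float]]:
    """Return n points in [0, 1)^k using Latin hypercube sampling.

    Each variable's range is split into n equal-probability intervals; one value
    is drawn uniformly inside every interval, and each column is shuffled
    independently so intervals are combined at random across variables.
    """
    rng = random.Random(seed)
    columns = []
    for _ in range(k):
        # One uniform draw inside each stratum [i/n, (i+1)/n).
        col = [(i + rng.random()) / n for i in range(n)]
        rng.shuffle(col)  # random pairing of strata between variables
        columns.append(col)
    return [list(row) for row in zip(*columns)]


def radical_inverse(i: int, base: int) -> float:
    """Van der Corput radical inverse: mirror the base-b digits of i about the point."""
    result, f = 0.0, 1.0 / base
    while i > 0:
        i, digit = divmod(i, base)
        result += digit * f
        f /= base
    return result


def halton(n: int, k: int) -> List[List[float]]:
    """First n points of the k-dimensional Halton sequence (index 0 skipped)."""
    primes = [2, 3, 5, 7, 11, 13, 17, 19, 23, 29]  # one prime base per dimension
    if k > len(primes):
        raise ValueError("this sketch supports at most 10 dimensions")
    return [[radical_inverse(i, primes[d]) for d in range(k)] for i in range(1, n + 1)]
```

For example, `latin_hypercube(10, 3)` returns ten 3-D points in which each of the ten intervals of every coordinate contains exactly one point, whereas `halton(10, 3)` is fully deterministic.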

Why? Well, the report you reference is interesting, but it leaves open the question as to how … . For $n \ge 2$, the LHS estimate $\hat{\mu}_{\mathrm{LHS}}$ of the mean of $f$ satisfies $\operatorname{Var}(\hat{\mu}_{\mathrm{LHS}}) \le \sigma^2/(n-1)$, where $n$ is the sample size and $\sigma^2 = \operatorname{Var}[f(X)]$ (the proof is in: Owen, 1997, Monte Carlo variance of scrambled net quadrature, SIAM Journal on Numerical Analysis 34:1884-1910). In other words, LHS can never be worse than IID sampling with one point fewer. So (excluding very small sample sizes) Latin hypercube sampling is always a good idea; it is more a question of "how good".

This paper proposes an extension of Latin Hypercube Sampling (LHS) that avoids the need to use intervals with the same probability area, where intervals with …
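Both the Owen (1997) result quoted above (LHS is never worse than IID sampling with one point fewer) and the role of additivity can be checked numerically. The sketch below assumes a hypothetical, purely additive integrand $f(x) = \sum_i x_i^2$ on $[0,1]^k$, for which $\sigma^2 = k(1/5 - 1/9)$, and estimates the variance of the sample-mean estimator by repeating each sampling scheme many times. All function names and parameter values are illustrative choices.

```python
import random
import statistics


def f(x):
    # Hypothetical additive integrand on [0, 1]^k: f(x) = sum of x_i^2.
    return sum(xi * xi for xi in x)


def iid_points(n, k, rng):
    # Simple random (IID) sampling: every coordinate drawn independently.
    return [[rng.random() for _ in range(k)] for _ in range(n)]


def lhs_points(n, k, rng):
    # Latin hypercube sampling: one draw per equal-probability stratum,
    # strata paired at random across variables by shuffling each column.
    cols = []
    for _ in range(k):
        col = [(i + rng.random()) / n for i in range(n)]
        rng.shuffle(col)
        cols.append(col)
    return [list(row) for row in zip(*cols)]


def estimator_variance(sampler, n=20, k=5, reps=1000, seed=1):
    """Empirical variance of the sample-mean estimate of E[f(X)] over reps runs."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(reps):
        pts = sampler(n, k, rng)
        estimates.append(statistics.fmean([f(x) for x in pts]))
    return statistics.variance(estimates)


if __name__ == "__main__":
    n, k = 20, 5
    sigma2 = k * (1 / 5 - 1 / 9)  # Var[f(X)]: each x_i^2 has variance 1/5 - 1/9
    v_iid = estimator_variance(iid_points, n, k)
    v_lhs = estimator_variance(lhs_points, n, k)
    print(f"IID: {v_iid:.2e}  LHS: {v_lhs:.2e}  bound sigma^2/(n-1): {sigma2 / (n - 1):.2e}")
```

Because this integrand is additive, the LHS variance should come out far below the IID variance; swapping in a strongly non-additive function (for instance, a product of the coordinates) should narrow the gap, in line with Proposition 10.1.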
